ICS 496 Capstone - Training AI models to Read 19th Century Archival Documents

Training AI Models to Read 19th Century Archival Documents

Purpose

The goal of our project is to utilize Artificial Intelligence and Machine Learning to accurately transcribe historical, handwritten documents. The timeline of our project will include determining which tools and approach will best achieve this goal.

Week 1

For our initial meeting and consultation the project sponsor, we worked to solidify the scope of work that was to be accomplished.

There were many questions that would have to be answered before we could fully determine the scope of work, and whether that work would be achievable within our timeline. Despite what would seem like an easy task, there were many questions that needed to be answered:

- Are there legal implications for uploading archival documents onto open or subscription AI transcribing services?

- Would the Artificial Intelligence models be easier to train down to a specific author? Or would the models be better suited for covering the vernacular and styles of a given time period?

- What products are out there currently that can best achieve our goal?

Week 2

For the second week, we dove into the research of several applications and how they could be useful in accomplishing our goal. One example is Transkribus, which is a popular platform for transcribing archival documents from photos. *** Of note, transcribing is the act of turning audio or video into type. Technically, the term for converting cursive handwriting to type text is referred to as “translating.” Due to how awkward that sounds, we will stick to using the term “transcribing” to define the conversion of handwritten cursive into typed text. ***

Transkribus

Transkribus.org is one of the front-running apps used for transcribing documents from handwriting to searchable typed text. One of our tasks was to first understand and present the legal and privacy rules that the app operates under. The goal of this is to ensure that there are no hidden agendas or nefarious uses of the data that is being uploaded to the application’s servers.

The Transkribus project operates as a CO-OP. The statues of that CO-OP describe their mission statement but make no mention of the legal ownership or use of the documents that are uploaded. There are operational processes, member rights, and cooperative functions that are mentioned. This document specifically does not mention the Intellectual Property rights over the data or documents uploaded to their system.

The next document reviewed was the Privacy Policy. The Privacy Policy does describe how the data is handled and stored. Namely:

- READ-COOP collects data transferred to Transkribus, including uploaded images, recognized text, ground truth data, trained recognition models, and metadata.

- The data is hosted on servers in Germany and Austria.

- Users maintain control over the data they upload. The policy states that the data must not contain Personal Data unless the user signs a Data Processing Addendum.

- Uploaded data is temporarily stored only for the duration necessary to complete text recognition and is deleted promptly thereafter.

Finally, the General Terms and Conditions more expressly document the relationship between user and platform and we surmise the following bullet points:

- READ-COOP stores uploaded material only to the extent necessary to provide its services and improve its products. The platform does not guarantee permanent storage unless otherwise agreed upon.

- Documents submitted via Transkribus or APIs are stored only temporarily for processing purposes. For APIs, uploaded data is automatically deleted shortly after processing is completed.

- Ownership: Users retain ownership of all uploaded content, including handwritten material, processed results, and training data.

- Licensing: Users grant READ-COOP a limited, non-exclusive, worldwide, royalty-free license to store, modify, and process user content for the sole purpose of providing and improving its services.

- Users maintain all intellectual property rights for their material and processed outputs unless explicitly stated otherwise.

- The policy explicitly states that "User Content remains yours," reaffirming user ownership over scanned and uploaded documents.

- Users can share custom-trained models with others via the platform, with two sharing options:

o With Training Data- Makes both the model and the underlying training data public.

o Without Training Data- Only the model is shared, and the training data is kept private. - Users can withdraw consent for sharing at any time.

Handwriting OCR

Another AI transcript app that we found during our research is Handwriting OCR.

According to their website frequently asked questions page, "Handwriting OCR is a document automation service that specialises in digitizing documents containing handwriting. It uses a form of Optical Character Recognition (OCR) developed especially for reading handwriting (Source)."

Highlights about Handwriting OCR:

- Supports a wide range of file formats such as PDF, JPG, PNG, GIFT, HEIC, and TIFF.

- Supports multiple languages for processing documents.

- AI Models are pre-trained on a diverse set of public domain and licensed datasets to ensure privacy and confidentiality of user data.

- Handwriting OCR has a comprehensive API that allows users to integrate their services directly into applications.

In terms of the legal and privacy rules that Handwriting OCR operates under, here are some key points we found during our research (Source):

- Handwriting OCR have explicitly stated that any information uploaded to their services belongs to the users and that they only use the user's uploaded data to deliver their OCR services.

- Users have full control over and data that is uploaded to their services. Users are able to delete data at any time. Handwriting OCR will automatically delete processed documents after 7 days by default but if the user wants to either shorten or lengthen the time data is deleted after processing, they are able to adjust that as well. Once data is deleted, it is immediately and permanently removed from Handwriting OCR systems.

- Handwriting OCR will only retain user uploaded data as long as necessary to provide their services, emphasizing that users can delete their data at any time.

- Any data that is in transit and stored uses industry-standard encryption, implementing rigorous access controls and security protocols. Any non-EU customer data is stored in the US.

- Handwriting OCR is also in compliance with several data protection standards and regulations such as GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act).

- If a user requests support regarding any of their documents and grants Handwriting OCR permission to view and access their documents, only then will Handwriting OCR view and access a users documents.

Pen2Txt

Per their website, Pen2Txt is a relatively new Software as a Service (SaaS) platform that uses HTR to convert handwritten documents into digital texts.

Offerings

- Several image formats are supported, including JPG and PNG (but notably, not PDF)

- 1 credit = 1 page. Their highest pricing option is 49.90€ (~$51.89) per month which offers 500 credits per month.

- Data collection is in accordance with French law No. 78-17 of January 6, 1978 relating to computers, files, and freedoms: “Under the Data Protection Act of January 6, 1978, the User has the right to access, rectify, delete and oppose their personal data. The User exercises this right: via a contact form, via their personal space.”

- Intellectual Property: “The User must seek the prior permission of the site for any reproduction, publication, copy of various contents. They commit to using the contents of the site in a strictly private setting, any use for commercial and advertising purposes is strictly prohibited.”

- The Client chooses a subscription corresponding to the number of monthly credits they will benefit from for the recognition of handwritten documents. 1 credit = 1 page.

- Provision of Services: “In the absence of reservations or claims expressly issued by the Client upon receipt of the Services, they will be deemed to conform to the order, in quantity and quality. The Client will have a period of 1 month from the provision of the Services to issue complaints by Mail to [contact@pen2txt.com](mailto:contact@pen2txt.com), with all the relevant justifications, to the Service Provider.”

Further Considerations

- Does not offer API integration

- Source of training data is not publicly available

- Smaller company with limited background information

Sources

- Privacy policy. 08 November 2024. https://legal.transkribus.org/privacy

- “Statutes of the READ-COOP SCE with limited liability.”2022. Accessed 27 January 2025. https://help.transkribus.org/hubfs/Statutes_READ_COOP_SCE_current.pdf

- General Terms and Conditions. 2024. Accessed: 27 January 2025. https://legal.transkribus.org/terms.

- Handwriting OCR Frequently Asked Questions Page. 2025. Accessed: 29 January 2025. https://www.handwritingocr.com/#faq.

- Handwriting OCR Privacy Policy. 2025. Accessed: 29 January 2025. https://www.handwritingocr.com/privacy.

- Pen2Txt General Terms of Use. 01 January 2024. Accessed: 30 January 2025. .https://pen2txt.com/cgu

- Pen2Txt General Terms and Condition of Sale-Internet. 01 January 2024. Accessed: 30 January 2025. .https://pen2txt.com/cgu

Our plan is to propose options to the sponsor of viable ways to achieve the goal of transcribing archival documents using AI.

Weeks 3 & 4

For our 3rd and 4th week we had a clear path of work to accomplish. Our ultimate goal with this project is to create a trained AI model within the Transkribus platform. The AI model(s) will be trained to a specific time period and/or writer. This should result in Artificial Intelligence trained to transcribe scanned hand-written documents. The output is searchable text that can be compiled and relied upon for historical research for years to come.

BUT, training an AI model requires something that we currently don't have. In order to train our AI model, we require 20-30 pages of 100% accurate transcribed texts and images. These should all be relevant to the time period/author that we are looking to train the AI model on. Because these files as of yet do not exist, we had to come up with a solution.

We utilized the Text Titan I AI model that comes standard on Tranksribus. The error rate is relatively high due to the fact that it is trained in many languages, many authors, and many time periods. From here, the output was text that came at about a 60-70% accuracy. In order to achieve 100% accuracy, we would either need to read through all of the text ourselves and make the corrections throughout. OR, we could come up with a different solution.

In the spirit of utilizing AI, we turned to Artificial Intelligence once to assist in what we have termed "post-processing." We took our output data, and configured a custom GPT model to make spelling corrections based on the vernacular of the Marshall Islands (our current focus with historical documents). Additionally, corrections to the text would avoid changing the given sentence structure. One of our follow-on datasets to provide will be for words, proper nouns and other key words, that were relevant to the time period and location. This trained post-processing model will assist us in quickly turning a 60-70& accurate document into 90-95% accurate document. Form there, only minor changes are required in order to come up with the training data that we will need for training our AI model. The following week will consist of our first training session.

Weeks 5 & 6

Training our First AI Model to Transcribe

Utilizing the Transkribus platform, we created a dataset of 100% transcribed documents. The minimum dataset required for a custom AI model is 20 documents and that's where we started. Achieving 20 100% transcribed documents proved to be time-consuming. Proper nouns seemed to be the most difficult for both the AI model to transcribe (in this context, we mean the built-in transcribing model on Transkribus that served as our starting point for these documents). In order to get all of the words right, we have to use manual transcribing of the documents as well.

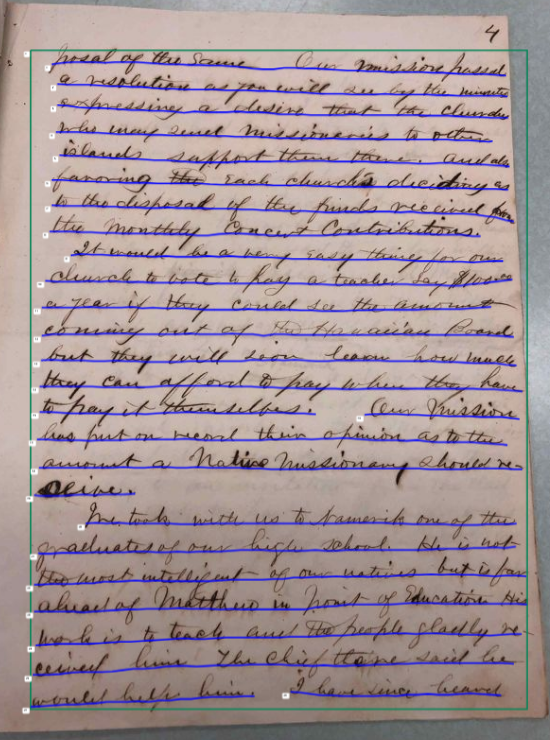

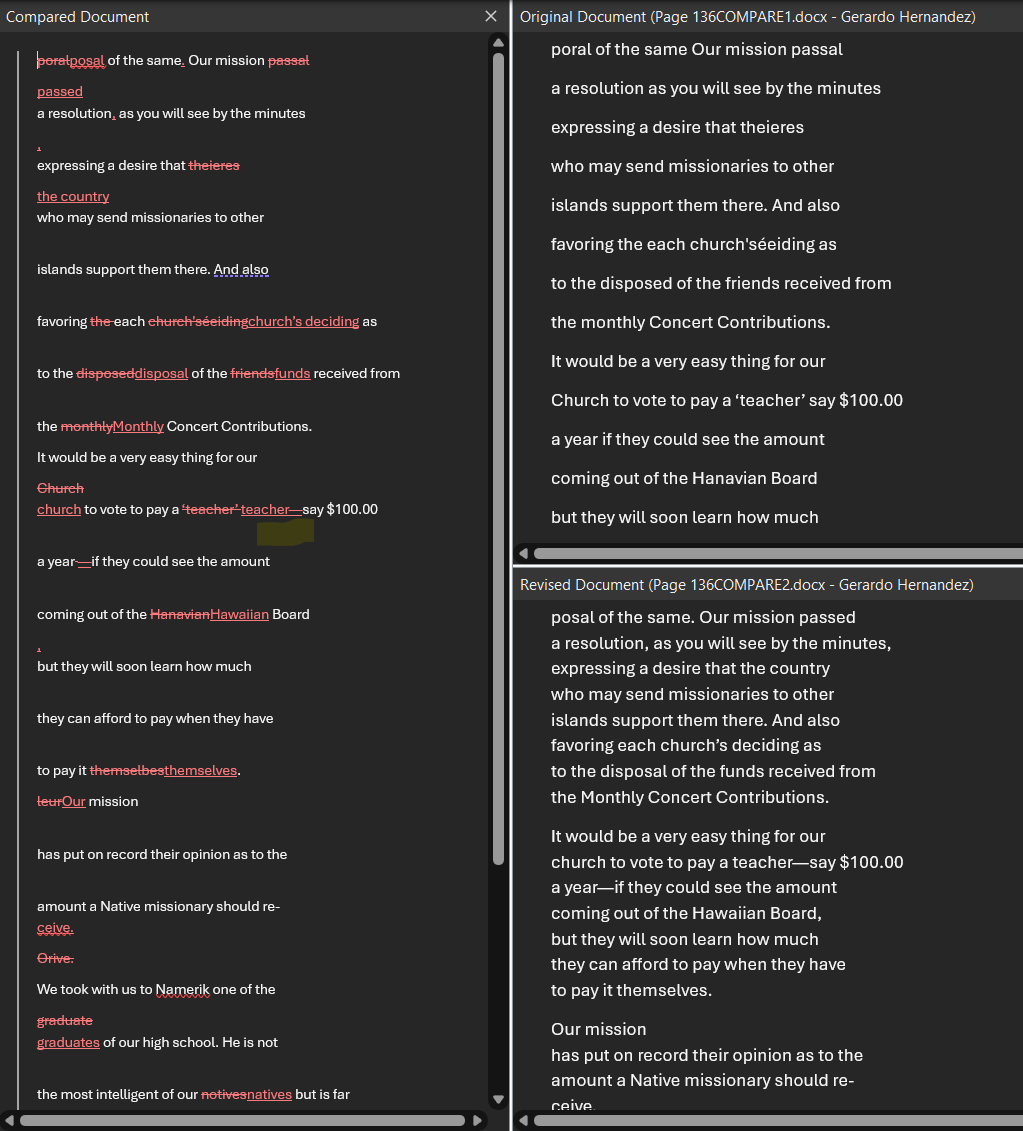

The transcribed dataset was used to train the AI model. Next, we would attempt to validate the accuracy of all of our sources thus far. To recap, we have:

- Source 1: Transkribus' built-in Text Titan Model

- Source 2: Transkribus' built-in model with Manual Corrections

- Source 3: Transkribus' built-in model with AI Post-Processing

- Source 4: Our Newly Trained AI model for Marshallese documents

Validating our Accuracy

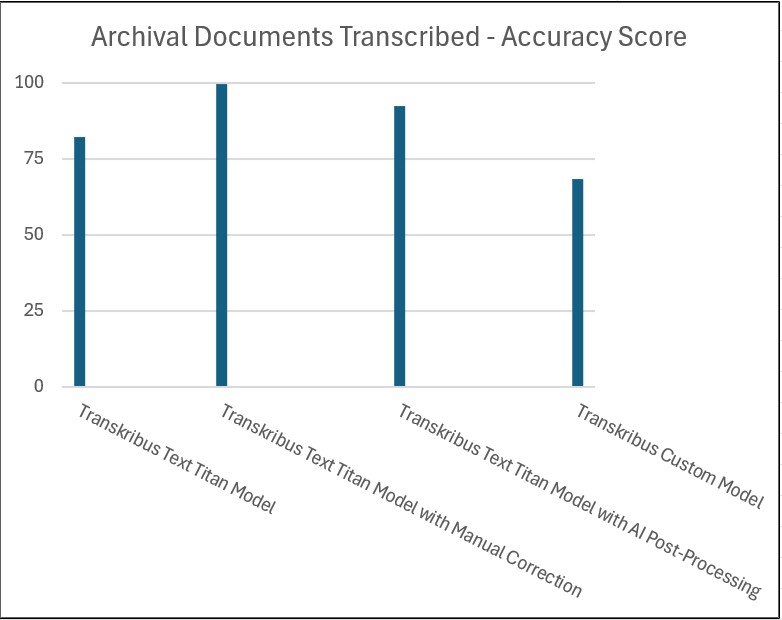

In order to continuously validate our progress, we took data samples at each step of the process. By comparing the accuracy obtained at each step to the 100% transcribed documents, we were able to come up with a simple graph to show our CURRENT state of accuracy.

|

|

The following graph shows our current accuracy ratings:

One of the next major milestones for the project will be to work with the sponsor to find a focus for the next step. Some options will be in how we can make a GUI or an automated process to both transcribe documents and build a searchable dataset for the sponsor to be able to locate documents by searched text.

Lessons Learned

Information to follow...